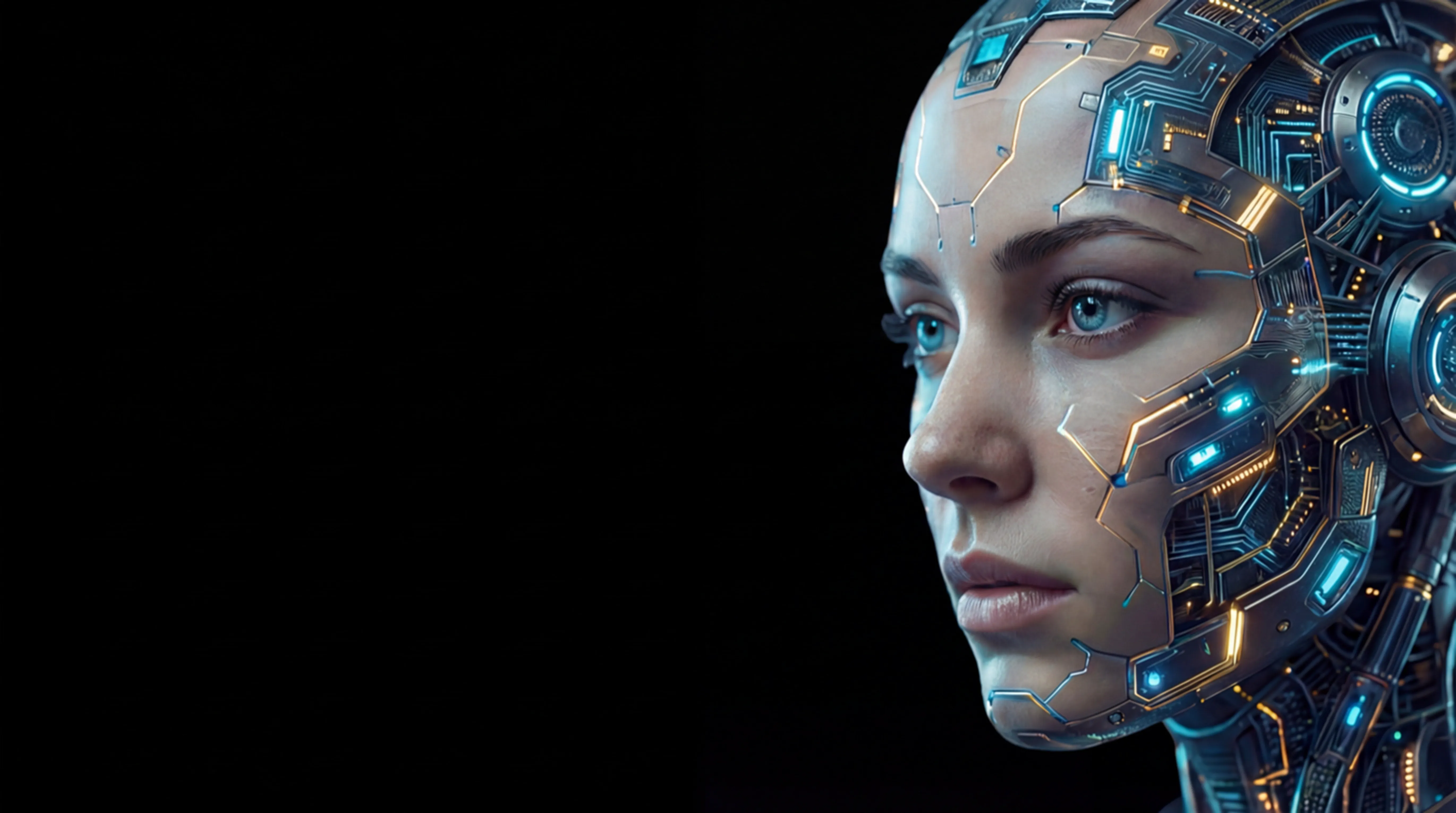

Erasing the lines between technology and people

We develop technologies that act and think like humans.

Our goal is a new kind of human–machine bond

The future of AI isn’t utility. It’s accessibility.

We build AI software that works in the real world—reliable, secure, and ready to scale. We combine strong engineering with practical machine learning to turn data into products: from intelligent automation and decision systems to custom copilots and analytics platforms. We move fast, stay transparent, and ship solutions that integrate cleanly with your business.

We engineer connection — from deep tech to daily routine

Our KappaDelta LLM core is built on advanced AI memory systems

Our solutions are:

- production-ready, secure, scalable, and maintainable;
- KPI-driven, ROI-focused, outcome-based, and transparent;
- API-first, modular, compatible, and fast to deploy.

Designed to Be Part of Your Daily Life

Our AI is built to integrate into daily life, supporting tasks, habits, and routines.

It collects input, delivers relevant responses, and maintains consistent context over time.

Based on Principles That Protect and Empower

Hey… you there?

Always. What kept you up this late?

Couldn’t sleep. My head’s full of stuff — work, deadlines, the usual chaos.

Sounds like your mind’s still in “problem-solving” mode.

Want me to help you slow it down?

Meet the Team

The great minds behind our work

ML engineers

AI Model Development Experts

Our ML engineers design, train, and deploy machine learning models that power production-grade AI products. They work across the full lifecycle: data preparation, feature engineering, model selection, fine-tuning, evaluation, and optimization for latency and cost. They build robust training and inference pipelines with monitoring, retraining strategies, and quality controls to keep performance stable over time. The team focuses on measurable accuracy, reliability, and safety, using MLOps best practices to move models from research to scalable systems. The result is AI technology that is technically sound, maintainable, and ready for real-world workloads.

Prompt engineers

LLM Behavior & Prompt Ops

Our prompt engineers design and optimize the instruction layer that governs how language models reason, respond, and follow policy. They build reusable prompt architectures, tool-calling workflows, and guardrails that produce consistent outputs across tasks and channels. They evaluate prompts with structured test suites, edge-case scenarios, and quality metrics to reduce hallucinations and improve reliability. The team works closely with ML and product teams to translate business requirements into precise, controllable model behavior. The result is AI functionality that is predictable, safe, and tuned for real production use.

Data scientists and applied AI researchers

Applied AI Research & Analytics

Our data scientists and applied researchers turn business problems into measurable AI objectives and validated model solutions. They design experiments, build baselines, and develop evaluation frameworks to compare approaches using clear metrics. The team explores modeling strategies—from classical ML to modern LLM methods—and identifies the most effective path for accuracy, latency, and cost. They also analyze data quality, labeling strategy, and distribution shifts to reduce risk in production. The output is research-backed AI that is evidence-driven, reproducible, and ready to be engineered into scalable products.

DevOps

Reliable AI Infrastructure & Operations

Our cloud, DevOps, and SRE team builds and operates the infrastructure that keeps AI systems fast, stable, and cost-efficient. They implement infrastructure-as-code, container orchestration, and secure networking to support training workloads and low-latency inference. The team sets up observability (logs, metrics, tracing, and alerting) to detect quality regressions and operational issues early. They manage CI/CD pipelines, release processes, scaling strategies, and disaster recovery to ensure predictable deployments. The result is production-grade availability and performance for AI systems under real-world load.

Our technologies

Programming languages

We choose languages that match the job—rapid experimentation for AI research, high performance for production, and long-term maintainability.

Cloud services

Our infrastructure is cloud-native by design—secure, elastic, and optimized for compute-heavy AI workloads across major providers.

Web services

We build APIs and web services that are stable, well-documented, and easy to integrate for internal or customer-facing products.